
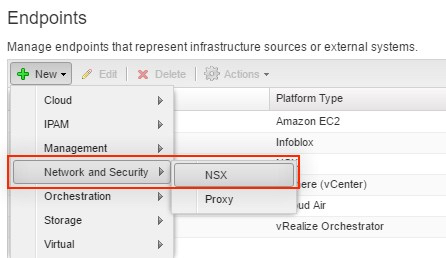

Continuing on the same theme, Make the Private Cloud Easy, that we covered in the two previous blog posts (vRA 7.3 What’s New – Part 1 and What’s New – Part 2), this part of the series highlights more of the NSX integration enhancements, focusing on the Enhanced NAT Port Forwarding Rules.

So let’s get started Eh!

Enhanced NAT Port Forwarding Rules

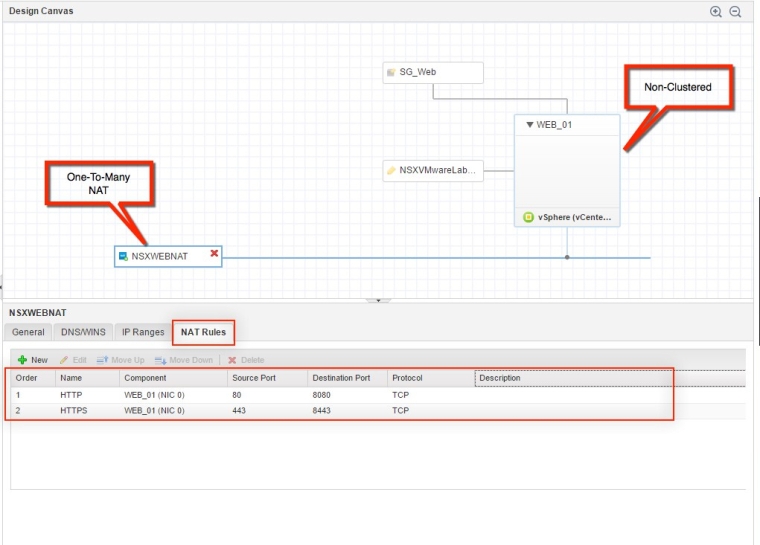

While configuring the On-Demand NAT network in the CBP (Converged Blueprint), you can now create NAT forwarding rules at design time for a One-To-Many type NAT network component when you associate it with a Non-Clustered vSphere Machine component or an On-Demand NSX load balancer component.

You can define NAT rules for any NSX-supported protocol, then map a port or a port range from the external IP address of an Edge (Source) to a private IP address in the NAT network component (Destination).
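To make the source/destination mapping a bit more concrete, here is a minimal sketch of how such a rule could be modeled. This is not the vRA or NSX API; the `NatRule` class, the `parse_ports` helper, the field names, and the IP address are all hypothetical and only illustrate the idea of a protocol plus a port or port range.

```python
from dataclasses import dataclass


def parse_ports(spec: str) -> range:
    """Turn '80' or '8000-8010' into an inclusive range of port numbers."""
    first, _, last = spec.partition("-")
    low = int(first)
    high = int(last) if last else low
    if not (0 < low <= high <= 65535):
        raise ValueError(f"invalid port spec: {spec!r}")
    return range(low, high + 1)


@dataclass(frozen=True)
class NatRule:
    """Hypothetical model of one port forwarding rule (not a vRA/NSX type)."""
    name: str
    protocol: str           # e.g. "tcp" or "udp"
    source_ports: str       # port(s) published on the Edge's external IP
    destination_ip: str     # private IP inside the NAT network
    destination_ports: str  # port(s) on the private machine

    def matches(self, protocol: str, port: int) -> bool:
        """True if traffic for this protocol and external port hits the rule."""
        same_protocol = protocol.lower() == self.protocol.lower()
        return same_protocol and port in parse_ports(self.source_ports)


# Example values are made up for illustration only.
rule = NatRule("web", "tcp", "8000-8010", "192.168.10.5", "8000-8010")
print(rule.matches("tcp", 8005))
print(rule.matches("udp", 8005))
```

The first call matches because TCP port 8005 falls inside the published range; the second does not because the protocol differs.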

These rules can be set in a specific order when configured at design time. They can also be added, removed, and re-ordered in an existing deployment as a day-2 action/operation.
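Why does the order matter? Here is a small sketch, assuming rules are evaluated top to bottom and the first matching rule wins (an assumption for illustration; check the NSX documentation for the exact processing behavior). The rule dictionaries, the `first_match` and `move_rule` helpers, and the addresses are all hypothetical.

```python
def first_match(rules, protocol, port):
    """Return the first rule, in list order, that covers the protocol and port."""
    for rule in rules:
        low, high = rule["ports"]
        if rule["protocol"] == protocol and low <= port <= high:
            return rule
    return None


def move_rule(rules, name, new_index):
    """Return a new list with the named rule moved to new_index."""
    moved = next(r for r in rules if r["name"] == name)
    reordered = [r for r in rules if r["name"] != name]
    reordered.insert(new_index, moved)
    return reordered


# Two overlapping rules: a wide range and a single port inside that range.
rules = [
    {"name": "catch-all", "protocol": "tcp", "ports": (8000, 8100), "target": "192.168.10.5"},
    {"name": "admin", "protocol": "tcp", "ports": (8080, 8080), "target": "192.168.10.6"},
]

before = first_match(rules, "tcp", 8080)["name"]
after = first_match(move_rule(rules, "admin", 0), "tcp", 8080)["name"]
print(before, after)  # → catch-all admin
```

With overlapping rules, moving the more specific rule to the top changes which private machine receives the traffic.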

Important Notes:

- This only works with a One-To-Many type NAT network component. Creating NAT rules for a One-To-One type NAT network component isn’t supported in the CBP.

NAT Type One-to-Many

- The NAT network component can only be connected to a Non-Clustered vSphere Machine, which means the minimum and maximum instances settings for the vSphere Machine in the blueprint can’t be more than 1, so a user can’t request more than one instance in a deployment.

Non-Clustered

D-NAT Rules that can be Ordered

- If you must use a Clustered vSphere Machine, you have to leverage an On-Demand load balancer. You can then create a NAT rule on the One-To-Many type NAT network component that is associated with the VIP network of the NSX load balancer component.

Clustered Machine > 1 x Deployment

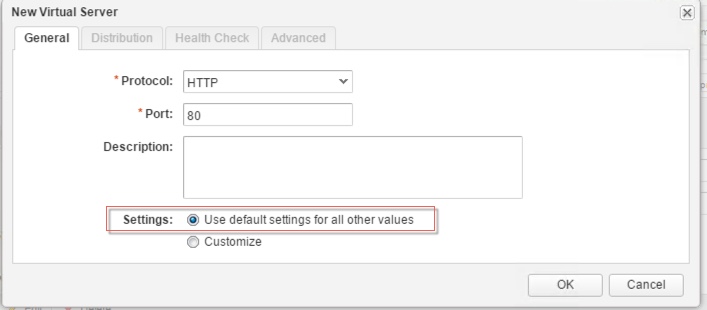

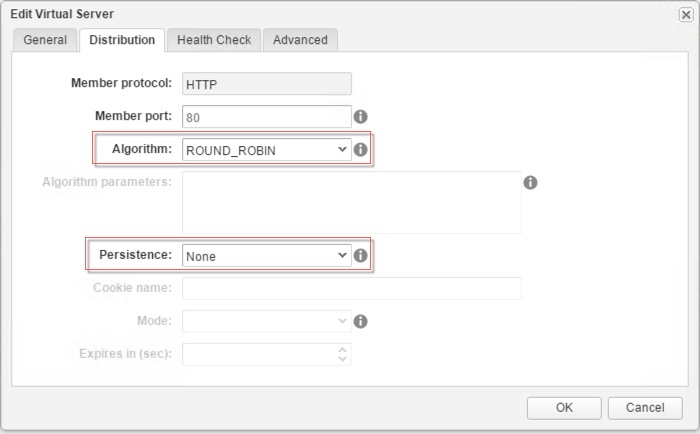

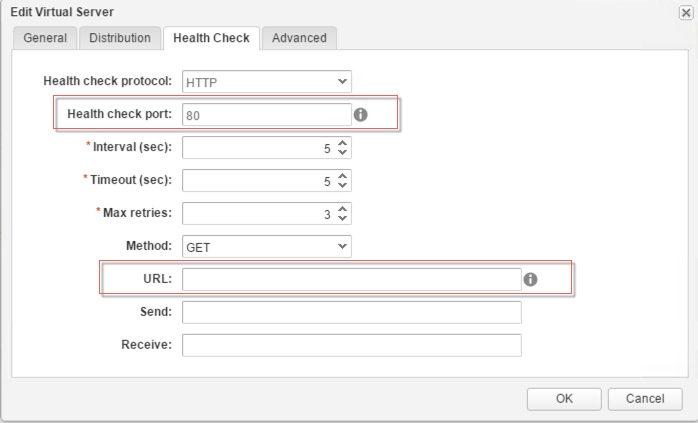

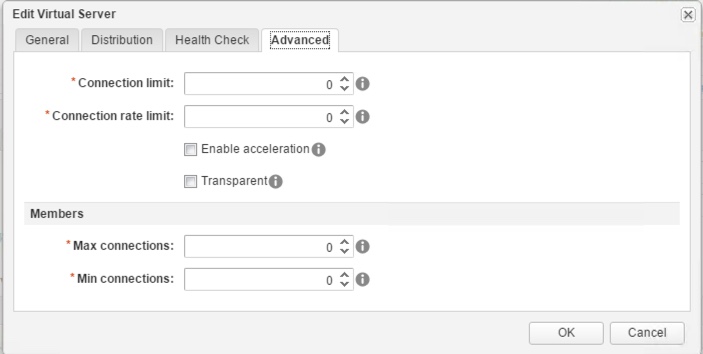

Load Balancer VIP settings depending on the network association

- In the above picture, the NAT rules publish HTTP (port 80) and HTTPS (port 443) on the external IP address of an Edge, then map those ports to the private IP and destination ports HTTP 8080 and HTTPS 8443 of the destination vSphere Machine. Since the load balancer VIP network is on the internal private network connected to NIC 0 of the clustered vSphere machines, we create the virtual servers on the load balancer using HTTP port 8080 and HTTPS port 8443.
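The port relationship in that example can be checked with a quick sketch. The structures and the `unmatched_rules` helper below are hypothetical, not something vRA exposes; the port numbers are the ones from the example above. The idea is simply that every NAT rule's destination port needs a virtual server listening on the same port on the VIP.

```python
# NAT rules from the example: external port on the Edge -> port on the VIP.
nat_rules = [
    {"name": "http", "external_port": 80, "destination_port": 8080},
    {"name": "https", "external_port": 443, "destination_port": 8443},
]

# Ports the load balancer virtual servers listen on (internal VIP network).
virtual_server_ports = {8080, 8443}


def unmatched_rules(nat_rules, virtual_server_ports):
    """Return names of NAT rules whose destination port has no virtual server."""
    return [
        rule["name"]
        for rule in nat_rules
        if rule["destination_port"] not in virtual_server_ports
    ]


print(unmatched_rules(nat_rules, virtual_server_ports))  # → []
print(unmatched_rules(nat_rules, {80, 443}))             # → ['http', 'https']
```

The second call shows the mistake to avoid: creating the virtual servers on the external ports 80 and 443 instead of the translated ports 8080 and 8443.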

Again I really want to highlight the fact that the following elements are not supported for creating NAT rules:

- NICs that are not in the current network

- NICs that are configured to get IP addresses by using DHCP

- Machine clusters without the use of a Load balancer

- One-To-One type NAT network component
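If it helps to think of that list as a pre-flight check, here is a rough sketch. The `Target` class and `nat_rule_blockers` function are hypothetical names used for illustration; vRA performs its own validation in the blueprint designer, and this only mirrors the four restrictions listed above.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Target:
    """Hypothetical description of what a NAT rule would point at."""
    nic_on_nat_network: bool    # is the NIC attached to this NAT network?
    nic_uses_dhcp: bool         # does the NIC get its IP address via DHCP?
    max_instances: int          # blueprint maximum instances setting
    behind_load_balancer: bool  # is an on-demand load balancer in front?
    nat_type: str               # "one-to-many" or "one-to-one"


def nat_rule_blockers(target: Target) -> List[str]:
    """Return the reasons a NAT rule is not supported; empty means OK."""
    reasons = []
    if not target.nic_on_nat_network:
        reasons.append("NIC is not in the current network")
    if target.nic_uses_dhcp:
        reasons.append("NIC gets its IP address by using DHCP")
    if target.max_instances > 1 and not target.behind_load_balancer:
        reasons.append("machine cluster without a load balancer")
    if target.nat_type != "one-to-many":
        reasons.append("One-To-One type NAT network component")
    return reasons


ok = Target(True, False, 1, False, "one-to-many")
bad = Target(True, True, 3, False, "one-to-one")
print(nat_rule_blockers(ok))   # → []
print(nat_rule_blockers(bad))
```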

Change NAT Rules in an Existing Deployment

After a successful deployment that includes one or more NAT forwarding rules, a user can later add, edit, and delete NSX NAT rules in the deployed One-To-Many NAT network. The user/owner can also change the order in which the NAT rules are processed, just as we showed during the design of the blueprint.

Important Notes:

- The Change NAT Rules operation is not supported for deployments that were upgraded or migrated from vRealize Automation 6.2.x to this vRealize Automation release.

- You cannot add a NAT rule to a deployment that is mapped to a third-party IPAM endpoint such as Infoblox.

A user must log in to vRA as a machine owner, support user, business group user with a shared access role, or business group manager to be entitled to change NAT rules in a network.
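Putting the notes and the role requirement together, the preconditions could be sketched like this. The role strings, the `change_nat_rules_blockers` function, and its parameters are hypothetical labels for illustration, not identifiers from vRA.

```python
# Hypothetical role labels, taken from the wording above.
ENTITLED_ROLES = {
    "machine owner",
    "support user",
    "business group user with shared access",
    "business group manager",
}


def change_nat_rules_blockers(role, upgraded_from_62x, third_party_ipam, adding_rule):
    """Return the reasons the Change NAT Rules action would not be available."""
    reasons = []
    if role not in ENTITLED_ROLES:
        reasons.append("role is not entitled to change NAT rules")
    if upgraded_from_62x:
        reasons.append("deployment was upgraded or migrated from 6.2.x")
    if third_party_ipam and adding_rule:
        reasons.append("cannot add a rule with a third-party IPAM endpoint")
    return reasons


print(change_nat_rules_blockers("machine owner", False, False, adding_rule=True))  # → []
print(change_nat_rules_blockers("machine owner", False, True, adding_rule=True))
```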

Once that is verified, a user can:

1. Select Items > Deployment.

2. Locate the deployment and display its children components.

3. Select the NAT network component to edit.

4. Click Change NAT Rules from the Actions menu.

5. Add new NAT port forwarding rules, reorder rules, edit existing rules, or delete rules. Whatever makes you happy!

6. When you have finished making changes, click Save or Submit to submit the reconfiguration request.

7. Check the status of your request under the Requests tab to confirm that it is successful.

8. In my case, I simply changed the order so that the HTTPS forwarding NAT rule is applied first. If you click the Request ID after it has successfully completed, you will see just that.

This was short and sweet, hope you enjoyed it. Now go give it a shot.

The End Eh!

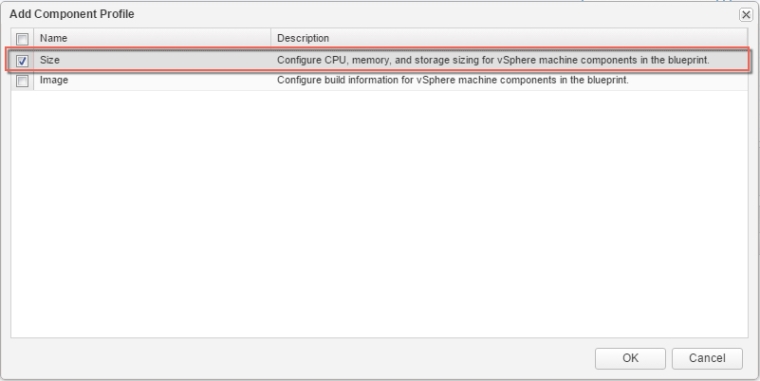

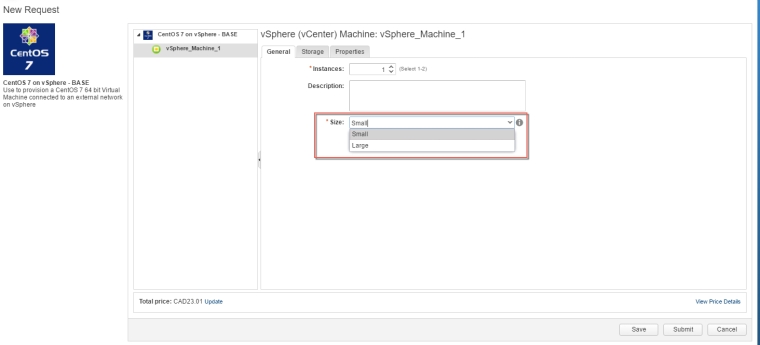

