vSphere Integrated Containers Engine is a container run-time for vSphere, allowing developers familiar with Docker to develop in containers and deploy them alongside traditional VM-based workloads on vSphere clusters, and allowing for these workloads to be managed through the vSphere UI in a way familiar to existing vSphere admins.

vSphere Integrated Containers comprises three major components:

- vSphere Integrated Containers Engine, a container runtime for vSphere that allows you to provision containers as virtual machines, offering the same security and functionality of virtual machines in VMware ESXi™ hosts or vCenter Server® instances.

- vSphere Integrated Containers Registry, an enterprise-class container registry server that stores and distributes container images. vSphere Integrated Containers Registry extends the Docker Distribution open source project by adding the functionalities that an enterprise requires, such as security, identity and management.

- vSphere Integrated Containers Management Portal, a container management portal that provides a UI for DevOps teams to provision and manage containers, including the ability to obtain statistics and information about container instances. Cloud administrators can manage container hosts and apply governance to their usage, including capacity quotas and approval workflows.

These components currently support the Docker image format. vSphere Integrated Containers is entirely Open Source and free to use. Support for vSphere Integrated Containers is included in the vSphere Enterprise Plus license.

Now that we are done with the intro, we will only be focusing on VIC Engine in this post and how we can leverage vRealize Automation 7.x to make it even better and faster to deploy by users as a service.

I have been playing with vSphere Integrated Containers for a while now and since the early beta days. I can tell you that deploying and deleting the VCH Endpoint so many time was a bit painful since the command line is so rich including so many parameters that you can choose from where some are mandatory and some are optional, which of course can be a bit overwhelming specially when you fat finger some of these parameters as often as I do.

Example of the Linux command line with some of its parameters to deploy a Virtual Container Host on vSphere, looks something like this :

./vic-machine-linux create –name VCH_Name -t ‘UserName@domain.com:Password@vCener_IP_or_FQDN‘ –compute-resource Target_Cluster –public-network Target_Managment_Network –bridge-network Target_Bridge_Network –image-store DataStore_Name –volume-store DataStore_Name :default –dns-server DNS_IP_Or_FQDN –public-network-ip VCH_IP –public-network-gateway Gateway_IP/CIDR–force –no-tlsverify

During all this testing time I had to save the entire command line in a text file with all of its parameters, so I can simply copy and past the command when I need to, after replacing some of these parameters with the values I wanted to use, so I don’t have to type it over and over every single time I decide to deploy or delete a Virtual Container Host to test.

Having in mind our main use case for vRealize Automation and that is IT Automating IT , I wanted to find a way where I can somehow provide this as a service in my home lab where I can simply select the service and submit the request from the catalog.

Well, I did that some time ago and today I m excited to share that publicly on my new blog with all of you out there !

So please sit tight, enjoy the ride as I Explain…

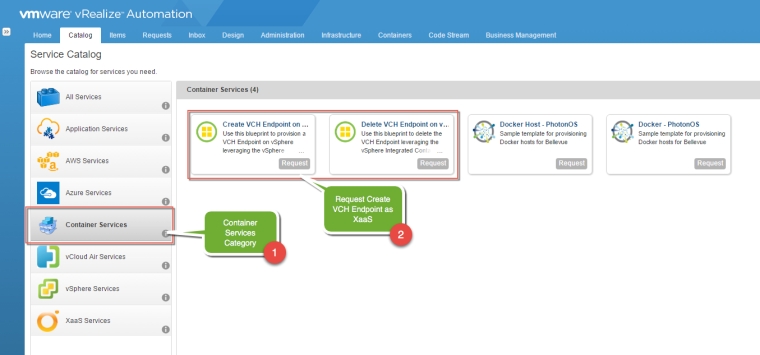

In vRealize Orchestrator I managed to leverage the Guest Script Manager to take the command line with the majority of its parameters and automate the life out of it by creating the desired workflows use cases ( The Creation and Deletion of the VCH process ) then use these workflows as Anything as a service XaaS type blueprints in vRA to essentially present it as an item catalog where users can easily request to create a new VCH or delete an existing one.

Of course there are many other ways on how you can do the VCH automation piece and probably even better than the one I’m sharing here, but this is simply how I did it!.

Steps and User Experience

1. Request the Service from the Catalog

2. Provide the VIC Machine Information

3. Provide the targeted vCenter Server

4. Provide the VCH Configuration needed for the deployment

5. Workflow executes in vRO to deploy the VCH endpoint on vSphere

You can also wrap an approval / governance policy around it which vRA can easily provide and have all the parameter’s values available to users in drop-down list format within the XaaS Forms on the request page, so the requester don’t have to wonder when filling out these form requests, things like which cluster I should be deploying this to, What network I should be selecting, What Storage I should use and more importantly standardize these inputs to avoid typos to standardize the service overall so its consistent across the IT organization.

I tested both XaaS blueprints ( Create and Delete VCH ) and both works like a charm. I still though have to clean it up a bit but I will be sharing both the vRO package and the XaaS blueprints here on this post so others can use it or build on top of it and make it even better since I am not really an expert when it comes to developing vRO workflow but I m doing my best to learn even more.

High level Deployment Guide

Please be aware that this has not been tested yet outside my lab, so please provide feedback if you have any issues, in case I need to tweak things :

- Download the VCH 1.0 (Here) or VCH 1.1 (Here) Automation package depending on the VIC version bundle you have or planning to download and extract its content. The package includes the vRO package that includes the VCH workflows and the 2 XaaS VCH Blueprints for the VCH Create and Delete operation.

- If you download the 1.0 VIC bundle (Here) make sure its extracted to /workspace/vic on the desired VIC machine (The Machine that host the VIC Bundle), here you will use the VCH 1.0 in step 1.

- If you download the 1.1 VIC bundle (Here) make sure its extracted to /workspace/vic on the desired VIC machine (The Machine that host the VIC Bundle), here you will use the VCH 1.1 in step 1.

- Import the vRO package into the vRA embedded vRO instance using the vRO client

- Use the Cloud Client (Here) to import the two XaaS Blueprints into vRA where you can then publish and entitle them to users.

- Confirm that the blueprints are actually pointing to the respective VCH workflows that you imported perilously.

Please make sure to map the right VCH Automation package version with the right VIC Bundle version since some of the command syntax changed in VIC 1.1

Important Deployement Notes

- This was done using the Guest Script Manager as I mentioned before which is already bundled in the VCH 1.0 vRO package along with the VCH workflows in the vRO package I Exported, so you don’t have to install the GSM yourself.

- All the fields for this version is mandatory and can’t be skipped for now, but its something that you can definatly modify if you want to.

- All the fields are static, so later on you can configure some of the field’s in XaaS forms as drop-down lists and provide value from you own environment such as Clusters Name, Network port-groups or storage..etc

- The Workflow will deploy VCH with Server-side authentication with auto-generated, untrusted TLS certificates that are not signed by a CA, with no client-side verification. i.e. –no-tlsverify is hard coded as you will see in the create command mentioned below.

- You have VIC bundle deployed and extracted to a folder called /workspace/vic/ on a Linux machine called out in the XaaS forms as the VIC Machine VM available within the same vCenter/environment. This can be the vRA appliance as well and you can modify the original Workflow to preset the values for the VIC machine properties section (2nd Screenshot above) so the user don’t even have to select it or go through the first request tab.

- The VCH deployment can be used and manually added in Admiral using the certificate type credentials which can be obtained from the VIC Machine from the VCH folder created after a successful deployment . for example if you deploy an endpoint called VCH01, both the server-cert.pem and server-key.pem would be located in /workspace/vic/VCH01 folder on the VIC Machine.

- This is the command line that being executed on the VIC Machine VM ( which is the VM that has the VIC bundle deployed and extracted to /workspace/vic ) . All the parameters that are used between vRA and vRO are in-between brackets.

The Create Command Used in the Create Workflow for VIC 1.0

./vic-machine-linux create --name [vchName] --appliance-cpu [vchCpu] --appliance-memory [vchMem] -t '[vCenterUserName]:[password]@[vCenterIp]' --compute-resource '[clusterName]' --public-network [publicNetwork] --bridge-network [bridgeNetwork] --image-store [imageStore] --volume-store [volumeStore]:[volumeName] --dns-server [dnsServerIp] --public-network-ip [vchPublicIp] --public-network-gateway [vchPublicGateway]/[vchPublicGatewaySubnet] --force --no-tlsverify

The Create Command Used in the Create Workflow for VIC 1.1

./vic-machine-linux create --name [vchName] --endpoint-cpu [vchCpu] --endpoint-memory [vchMem] -t '[vCenterUserName]:[password]@[vCenterIp]' --compute-resource '[clusterName]' --public-network [publicNetwork] --bridge-network [bridgeNetwork] --image-store [imageStore] --volume-store [volumeStore]:[volumeName] --dns-server [dnsServerIp] --public-network-ip [vchPublicIp]/[vchPublicIpSubnet] --public-network-gateway [vchPublicGateway] --force --no-tlsverify

You notice if you compare the create command between the two versions that some of the parameters were changed. i.e. –appliance-cpu renamed to –endponit-cpu

The Delete Command Used in the Delete Workflow is same for both versions

./vic-machine-linux delete --force -t '[vCenterUserName]:[password]@[vCenterIp]' --compute-resource '[clusterName]' --name [vchName]

Have fun Everyone!